Is BigQuery part of your data stack? If so, this tutorial shows you how to set up automated data pipelines into it. Google has great support for its own products, but you will almost always have other platforms in your marketing stack as well. In this article and the accompanying YouTube tutorial, we show you how to stream all your analytics, CRM and media data into BigQuery. The currently supported platforms for this tutorial are:

Prerequisites before getting started

- Access to all the channels you would like to integrate

- Google Cloud account with BigQuery

There are a few steps involved in getting your data pipelines set up:

- Connecting your data

- Streaming the data into BigQuery

  - Option 1: Manual upload

  - Option 2: Automated data streaming

The main focus of this article is setting up data pipelines to BigQuery.

Let’s get started.

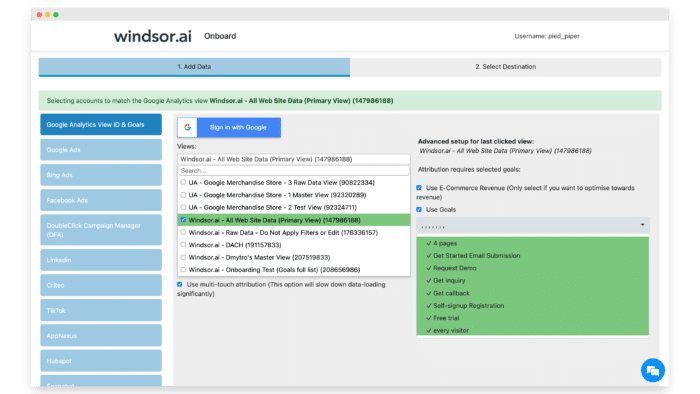

Connecting your data

To begin with, we have to connect our data to an ETL platform. In this example we’ll use our platform, where I have connected the data from all of our channels.

Of course you can add channels as you like (see the table at the beginning of this article for more details).

To get started with connecting your data, simply head to our setup page and sign up for a trial to see it in action.

The platform is self-explanatory and requires only a few clicks. If you think it’s too complex, you can check out which buttons to click here.


Getting your data into BigQuery

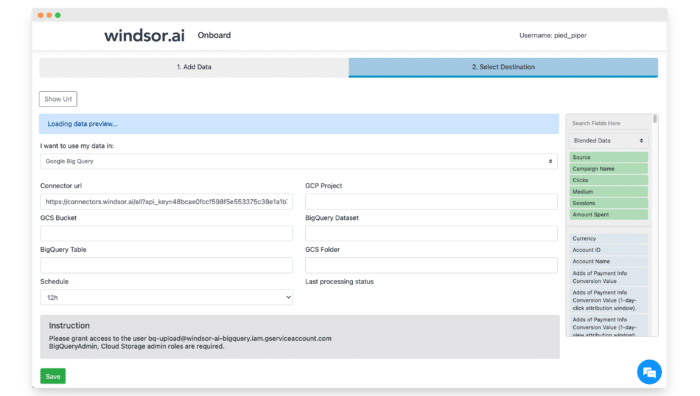

For this, we have two options. Option 1 is a manual JSON upload: we export a file and then import it into BigQuery by hand. Option 2 is the automated data upload. I will only touch on Option 1 briefly; if you want to automate your data pipelines, Option 2 is the one to focus on.
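For Option 1, one detail worth knowing is that BigQuery's JSON load expects newline-delimited JSON (one object per line) rather than a single JSON array. The sketch below converts an exported file accordingly. The file layout (a bare array, or rows wrapped under a `data` key) and the function names are assumptions for illustration; check them against your actual export.

```python
import json
from pathlib import Path


def to_ndjson(records):
    """Serialize a list of dicts as newline-delimited JSON, one object per line."""
    return "".join(json.dumps(row, ensure_ascii=False) + "\n" for row in records)


def convert_export(src, dst):
    """Read a JSON export from `src`, write NDJSON to `dst`, return the row count."""
    payload = json.loads(Path(src).read_text(encoding="utf-8"))
    # Assumption: the export is either a bare array of rows or an object
    # that wraps the rows under a "data" key. Adjust this to your file.
    rows = payload["data"] if isinstance(payload, dict) else payload
    Path(dst).write_text(to_ndjson(rows), encoding="utf-8")
    return len(rows)
```

Calling `convert_export("export.json", "export.ndjson")` (both file names are placeholders) produces a file you can select in the BigQuery upload dialog with the JSON (newline delimited) file format.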

Requirements for Option 2:

1. Connect your analytics, media and CRM data (or any other source you want; we support many)

2. Set up data streaming to your BigQuery table (don’t forget to grant access to bq-upload@windsor-ai-bigquery.iam.gserviceaccount.com)

Make sure you follow the instructions on the screen. Once you complete the setup, your data will start streaming at the interval you specify. You can always come back and change the settings later.
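If you prefer to grant the access from the command line rather than the Cloud Console, one possible route is to export the dataset description with `bq show --format=prettyjson`, add an access entry, and apply it with `bq update --source`. The helper below is a minimal sketch of the middle step. The `WRITER` role and the function name are assumptions; use whichever role the on-screen instructions ask for.

```python
import copy

# Service account named in the setup step above.
SERVICE_ACCOUNT = "bq-upload@windsor-ai-bigquery.iam.gserviceaccount.com"


def grant_dataset_access(dataset, email=SERVICE_ACCOUNT, role="WRITER"):
    """Return a copy of a dataset description with `email` granted `role`.

    `dataset` is the parsed JSON printed by
    `bq show --format=prettyjson PROJECT:DATASET`.
    The default role is an assumption, not taken from the setup screen.
    """
    updated = copy.deepcopy(dataset)  # leave the caller's dict untouched
    access = updated.setdefault("access", [])
    entry = {"role": role, "userByEmail": email}
    if entry not in access:  # adding the same grant twice is a no-op
        access.append(entry)
    return updated
```

A typical flow would be to save the `bq show` output to a file, load it with `json.load`, pass it through `grant_dataset_access`, write the result back with `json.dump`, and then run `bq update --source` on that file for the same dataset.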

Bonus (I did not cover this in the video)

Do you want to fetch data from the connectors directly? You can try to get additional fields that are not in the standard table definitions (such as custom dimensions, metrics and additional media metrics). You can start building your connector queries here.

Did you like this article or do you have any questions? We would love to have your feedback. Just contact us on the chat!
