Connecting all marketing data

See the value and ROAS of every marketing touchpoint

Multi-touch marketing attribution software

Get all your marketing data and metrics into any tool


Cross media optimisations

Optimise marketing ROI for all channels

Connecting all your marketing data with our marketing attribution software platform gives marketers a 15-44% increase in marketing ROI. Measure ROI for every channel, campaign, keyword and creative, taking into account cancellations, returns and offline conversions from your CRM.

Save time and automate

Automate the collection of all your marketing data and minimise the time spent preparing and formatting it, so you can focus on insights instead. With our advanced marketing attribution software, you can use all your data where and when you need it. Our connectors and APIs make it easy to consume the data in any tool.

Optimise Marketing with Multi-touch Attribution Modelling Software

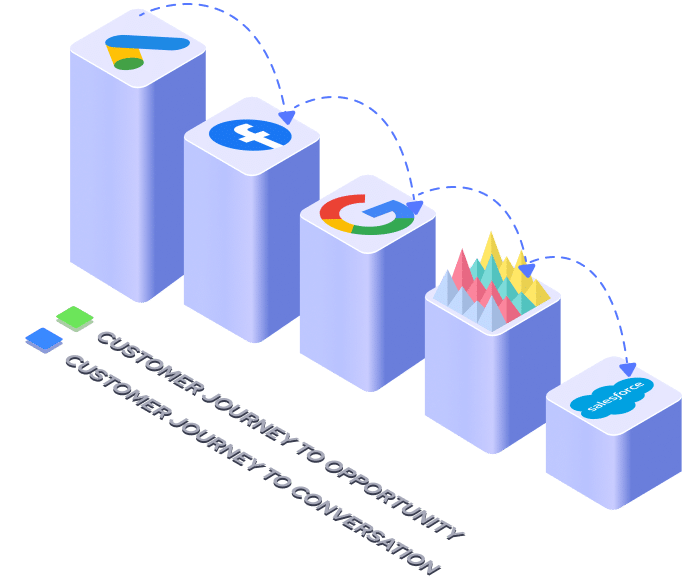

Analyse and optimise customer journeys across all touchpoints. Our marketing attribution software and budget optimiser help you save time when optimising budgets, and the resulting optimisations can be sent directly back to the marketing platforms.

Data that can be joined to enrich customer journeys:

‧ Cross-device data, e.g. from Criteo or Tapad.

‧ CRM data for offline conversions, returns and cancellations.

‧ TV and offline campaign data.

Attribution insights empower advertisers and marketers to make data-driven decisions about marketing budget allocation across the entire customer journey. Customer journey data is matched to cost data, and combined with multi-touch attribution this yields a ROAS for every touchpoint.
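To illustrate how journey data, cost data and multi-touch attribution combine into a per-touchpoint ROAS, here is a minimal Python sketch. It assumes a simple linear model (equal credit for every touchpoint in a journey), which is only one of several multi-touch models, and it drops orders that the CRM marks as cancelled or returned. The data shapes and function names (`journeys`, `costs`, `attribute_linear`, `roas_by_channel`) are hypothetical illustrations, not the platform's API.

```python
from collections import defaultdict

# Hypothetical customer journeys: ordered touchpoints (channels) per converting
# customer, the order's revenue, and a CRM flag for returned/cancelled orders.
journeys = [
    {"touchpoints": ["search", "social", "email"], "revenue": 300.0, "cancelled": False},
    {"touchpoints": ["social", "search"], "revenue": 200.0, "cancelled": False},
    {"touchpoints": ["display", "search"], "revenue": 150.0, "cancelled": True},
]

# Hypothetical cost data per channel for the same period.
costs = {"search": 100.0, "social": 80.0, "email": 20.0, "display": 50.0}


def attribute_linear(journeys):
    """Split each non-cancelled conversion's revenue equally across its touchpoints."""
    credit = defaultdict(float)
    for journey in journeys:
        if journey["cancelled"]:
            continue  # order returned/cancelled according to CRM: no revenue to credit
        share = journey["revenue"] / len(journey["touchpoints"])
        for channel in journey["touchpoints"]:
            credit[channel] += share
    return dict(credit)


def roas_by_channel(credit, costs):
    """ROAS = attributed revenue / cost, for every channel with non-zero cost."""
    return {
        channel: credit.get(channel, 0.0) / cost
        for channel, cost in costs.items()
        if cost > 0
    }


credit = attribute_linear(journeys)
print(roas_by_channel(credit, costs))
# → {'search': 2.0, 'social': 2.5, 'email': 5.0, 'display': 0.0}
```

Swapping `attribute_linear` for a position-based or data-driven model would change only how credit is split between touchpoints; the ROAS step that divides attributed revenue by matched cost stays the same.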

Integrations

Visualise, use and stream data to any tool

You can use and visualise the data in any tool, including R and Python.

We offer both native connectors and APIs.


Optimise for customer lifetime value (CLV) using CRM data

We have native connectors to all widely used marketing platforms and CRMs, and we make it easy to apply the transformations needed to match customer journeys across the different tools.